BIOMETRIC AUTHENTICATION SYSTEM

A biometric authentication system provides automatic identification of an individual based on a unique feature or characteristic possessed by the individual. Iris recognition is regarded as the most reliable and accurate biometric authentication system available. Most commercial iris recognition systems use patented algorithms developed by Daugman, and these algorithms have been reported to produce perfect recognition rates. However, published results have usually been produced under favorable conditions, and there have been no independent trials of the technology. This paper develops an iris recognition system in order to verify both the uniqueness of the human iris and its performance as a biometric under non-ideal conditions. Two digitized grayscale eye images are used to determine the recognition performance of the system. The iris recognition system consists of an automatic segmentation stage based on the Hough transform, which localizes the circular iris and pupil regions and isolates occluding eyelids, eyelashes and reflections. The extracted iris region is then normalized into a rectangular block of constant dimensions to account for imaging inconsistencies. Finally, phase data from 1D Log-Gabor filters is extracted and quantised to encode the unique pattern of the iris into a bitwise biometric template. The Hamming distance is used to classify iris templates, and two templates are found to match if a test of statistical independence is failed. A Hamming distance threshold is set to balance false acceptances against false rejections. On this basis, iris recognition is shown to be a reliable and accurate biometric technology.

INTRODUCTION

BIOMETRIC TECHNOLOGY:

A biometric system provides automatic recognition of an individual based on some unique feature or characteristic possessed by the individual. Biometric systems have been developed based on fingerprints, facial features, voice, hand geometry, handwriting, the retina and, as presented in this paper, the iris. Biometric systems work by first capturing a sample of the feature, such as recording a digital sound signal for voice recognition or taking a digital colour image for face recognition. The sample is then transformed by a mathematical function into a biometric template. The biometric template provides a normalized, efficient and highly discriminating representation of the feature, which can then be objectively compared with other templates in order to determine identity. Most biometric systems allow two modes of operation: an enrolment mode, for adding templates to a database, and an identification mode, where a template is created for an individual and a match is then searched for in the database of pre-enrolled templates. A good biometric uses a feature that is highly unique, so that the chance of any two people having the same characteristic is minimal; stable, so that the feature does not change over time; and easily captured, in order to provide convenience to the user and prevent misrepresentation of the feature.
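The two modes of operation can be illustrated with a small sketch. The class `TemplateDatabase`, the helper `fractional_hamming` and the threshold of 0.3 are hypothetical choices made for illustration, not part of any particular system; identification here returns the closest enrolled template, provided it lies within the threshold.

```python
from typing import Callable, Dict, List, Optional

Template = List[int]  # assumption: a template is modelled as a list of bits


class TemplateDatabase:
    """Hypothetical sketch of the enrolment and identification modes."""

    def __init__(self, distance: Callable[[Template, Template], float], threshold: float) -> None:
        self.templates: Dict[str, Template] = {}
        self.distance = distance    # dissimilarity between two templates
        self.threshold = threshold  # largest distance still accepted as a match

    def enrol(self, identity: str, template: Template) -> None:
        """Enrolment mode: add a template to the database."""
        self.templates[identity] = template

    def identify(self, probe: Template) -> Optional[str]:
        """Identification mode: search the pre-enrolled templates for the closest match."""
        best_id: Optional[str] = None
        best_dist = float("inf")
        for identity, stored in self.templates.items():
            d = self.distance(probe, stored)
            if d < best_dist:
                best_id, best_dist = identity, d
        # The person remains unidentified if even the closest template is too far away.
        return best_id if best_dist <= self.threshold else None


def fractional_hamming(a: Template, b: Template) -> float:
    """Fraction of positions at which two equal-length bit templates differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)


db = TemplateDatabase(fractional_hamming, threshold=0.3)
db.enrol("alice", [0, 1, 1, 0, 1, 0, 0, 1])
db.enrol("bob", [1, 1, 0, 0, 0, 1, 1, 0])
print(db.identify([0, 1, 1, 0, 1, 0, 1, 1]))  # -> alice (distance 1/8)
print(db.identify([1, 0, 0, 1, 0, 1, 0, 1]))  # -> None (closest is bob at 4/8)
```

Returning the closest template rather than the first one under the threshold avoids depending on the order in which templates were enrolled.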

THE HUMAN IRIS

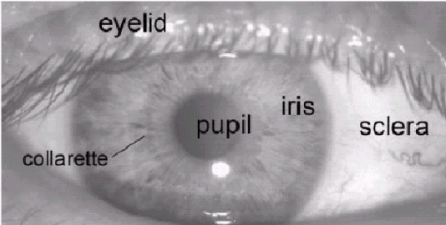

The iris is a thin circular diaphragm, which lies between the cornea and the lens of the human eye. A front-on view of the iris is shown in Fig.1. The iris is perforated close to its centre by a circular aperture known as the pupil. The function of the iris is to control the amount of light entering through the pupil, and this is done by the sphincter and the dilator muscles, which adjust the size of the pupil. The average diameter of the iris is 12 mm, and the pupil size can vary from 10% to 80% of the iris diameter. The iris consists of a number of layers, the lowest being the epithelium layer, which contains dense pigmentation cells. The stromal layer lies above the epithelium layer, and contains blood vessels, pigment cells and the two iris muscles. The density of stromal pigmentation determines the colour of the iris. The externally visible surface of the multilayered iris contains two zones, which often differ in colour.

These are an outer ciliary zone and an inner pupillary zone, which are divided by the collarette, appearing as a zigzag pattern. Formation of the iris begins during the third month of embryonic life. The unique pattern on the surface of the iris is formed during the first year of life, and pigmentation of the stroma continues over the first few years. Formation of the unique patterns of the iris is random and not related to any genetic factors. The only characteristic that is dependent on genetics is the pigmentation of the iris, which determines its colour. Due to the epigenetic nature of iris patterns, the two eyes of an individual contain completely independent iris patterns, and identical twins possess uncorrelated iris patterns.

IRIS RECOGNITION

The iris is an externally visible, yet protected organ whose unique epigenetic pattern remains stable throughout adult life. This characteristic makes it very attractive for use as a biometric for identifying individuals. Image processing

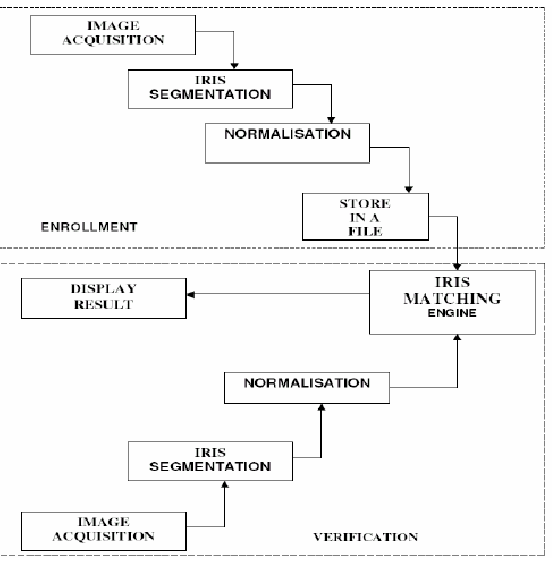

Fig.1: A Front-on-view on the human eye techniques can be employed to extract the unique iris pattern from a digitized image of the eye, and encode it into a biometric template, which can be stored in a database. This biometric template contains an objective mathematical representation of the unique information stored in the iris, and allows comparisons to be made between templates. When a person is to be identified by an iris recognition system, his eye is first photographed, and then a template created forthe iris region. This template is then compared with the other templates stored in a data base until a matching template is found and the person is identified. If no match is found the person remains unidentified. The Daugman system has been tested under numerous studies, all reporting a zero failure rate. The Daugman system is claimed to be able to perfectly identify an individual, given millions of possibilities. Fig: 2: Block Diagram of Iris Recognition System.

However, there have been no independent trials of the technology, and source code for these systems is not available. There is also a lack of publicly available datasets for testing and research, and published test results have usually been produced using carefully imaged irises under favorable conditions.

SEGMENTATION

The first stage of iris recognition is to isolate the actual iris region in a digital eye image. The iris region, shown in Fig.3, can be approximated by two circles: one for the iris/sclera boundary and another, interior to the first, for the iris/pupil boundary. The eyelids and eyelashes normally occlude the upper and lower parts of the iris region, and specular reflections can occur within the iris region, corrupting the iris pattern. A technique is therefore required to isolate and exclude these artifacts as well as to locate the circular iris region. The success of segmentation depends on the imaging quality of the eye images. In particular, persons with darkly pigmented irises will present very low contrast between the pupil and iris region if imaged under natural light, making segmentation more difficult. The segmentation stage is critical to the success of an iris recognition system, since data that is falsely represented as iris pattern data will corrupt the biometric templates generated, resulting in poor recognition rates.

IMPLEMENTATION

Here the circular Hough transform was used for detecting the iris and pupil boundaries. This involves first employing Canny edge detection to generate an edge map. Gradients were biased in the vertical direction for the outer iris/sclera boundary, while vertical and horizontal gradients were weighted equally for the inner iris/pupil boundary. The range of radius values to search for was set manually: the iris radius ranges from 90 to 150 pixels, while the pupil radius ranges from 28 to 75 pixels. To make the circle detection process more efficient and accurate, the Hough transform for the iris/sclera boundary was performed first, and the Hough transform for the iris/pupil boundary was then performed within the iris region instead of the whole eye region, since the pupil always lies within the iris region. After this process is complete, six parameters are stored: the radius and the x and y centre coordinates of each circle.

Eyelids are isolated by first fitting a line to the upper and lower eyelid using the linear Hough transform. A second horizontal line is then drawn, which intersects the first line at the iris edge that is closest to the pupil. This process is illustrated in Fig.3 and is done for both the top and bottom eyelids. The second horizontal line allows maximum isolation of eyelid regions. Canny edge detection is used to create an edge map, and only horizontal gradient information is taken. The linear Hough transform is implemented using the MATLAB Radon transform, which is a form of the Hough transform. If the maximum in the Hough space is lower than a set threshold, no line is fitted, since this corresponds to non-occluding eyelids.
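The circle search for the iris and pupil boundaries can be sketched as a plain voting accumulator. This is a minimal sketch, assuming a binary edge map is already available (the Canny step and the gradient weighting are not reproduced); the function name `circular_hough` and its parameters are hypothetical, and restricting the pupil search to the iris region is expressed through the optional `centre_mask`.

```python
import numpy as np


def circular_hough(edges, radii, centre_mask=None, n_angles=360):
    """Return (yc, xc, r) of the strongest circle in a binary edge map.

    edges:       2D bool array, True at edge pixels (assumed to come from an
                 edge detector such as Canny, which is not shown here).
    radii:       candidate integer radii, e.g. range(90, 151) for the iris.
    centre_mask: optional 2D bool array of allowed centres, e.g. the iris
                 disc when searching for the pupil.
    """
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    best, best_votes = (0, 0, 0), -1
    for r in radii:
        # Every edge pixel votes for all centres lying at distance r from it.
        cy = np.rint(ys[:, None] - r * np.sin(angles)).astype(int)
        cx = np.rint(xs[:, None] - r * np.cos(angles)).astype(int)
        inside = (cy >= 0) & (cy < h) & (cx >= 0) & (cx < w)
        acc = np.zeros((h, w), dtype=np.int64)
        np.add.at(acc, (cy[inside], cx[inside]), 1)
        if centre_mask is not None:
            acc[~centre_mask] = 0  # ignore centres outside the allowed region
        yc, xc = np.unravel_index(np.argmax(acc), acc.shape)
        if acc[yc, xc] > best_votes:
            best, best_votes = (int(yc), int(xc), int(r)), acc[yc, xc]
    return best
```

For the pupil, one would pass a boolean mask of the iris disc found in the first search together with `range(28, 76)`, which mirrors the order of the two searches described above.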

The lines are also restricted to lie exterior to the pupil region and interior to the iris region. A linear Hough transform has an advantage over its parabolic version in that there are fewer parameters to deduce, making the process less computationally demanding.
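The eyelid line fit can be sketched with a (rho, theta) accumulator used in place of MATLAB's Radon transform. The function `eyelid_line` and the `min_votes` threshold are hypothetical, and restricting the fitted line to the region between the pupil and iris boundaries is left to the caller.

```python
import numpy as np


def eyelid_line(edges, min_votes, n_theta=180):
    """Fit one line to an edge map with a (rho, theta) Hough accumulator.

    Returns (rho, theta_degrees) for the line x*cos(theta) + y*sin(theta) = rho,
    or None when the strongest peak has fewer than `min_votes` votes, which is
    treated as a non-occluding eyelid.
    """
    ys, xs = np.nonzero(edges)
    if xs.size == 0:
        return None
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)  # 0 .. <180 degrees
    diag = int(np.ceil(np.hypot(*edges.shape)))  # largest possible |rho|
    # rho for every (edge pixel, theta) pair, offset by diag so indices are >= 0.
    rhos = np.rint(xs[:, None] * np.cos(thetas) + ys[:, None] * np.sin(thetas)).astype(int) + diag
    theta_idx = np.broadcast_to(np.arange(n_theta), rhos.shape)
    acc = np.zeros((2 * diag + 1, n_theta), dtype=np.int64)
    np.add.at(acc, (rhos, theta_idx), 1)
    r_i, t_i = np.unravel_index(np.argmax(acc), acc.shape)
    if acc[r_i, t_i] < min_votes:
        return None  # weak peak: no eyelid line is fitted
    return int(r_i) - diag, float(np.rad2deg(thetas[t_i]))
```

Only two parameters are searched here, which is the computational advantage over a parabolic fit noted above.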

Fig.3 Stages of segmentation with eye image.

For isolating eyelashes, a simple thresholding technique was used, since analysis reveals that eyelashes are quite dark when compared with the rest of the eye image. However, this approach is limited by the imaging conditions: although the eyelashes are quite dark compared with the surrounding eyelid region, areas of the iris region can be equally dark. For such images, thresholding to isolate eyelashes would also remove important iris features, making the technique infeasible.
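The thresholding step, and its weakness when parts of the iris are as dark as the eyelashes, can be shown on a toy image. The function `eyelash_mask` and the cut-off of 60 are assumptions for illustration; a suitable threshold depends on the imaging conditions.

```python
import numpy as np


def eyelash_mask(eye, threshold, region=None):
    """Flag pixels darker than `threshold` as eyelash noise.

    eye:       2D grayscale image (e.g. uint8).
    threshold: intensity cut-off; a hypothetical, dataset-dependent value.
    region:    optional 2D bool array limiting where pixels may be flagged,
               e.g. the segmented iris annulus.
    """
    mask = eye < threshold
    if region is not None:
        mask &= region
    return mask


# Toy image: bright background, two dark eyelash pixels and one dark iris pixel.
eye = np.full((5, 5), 180, dtype=np.uint8)
eye[0, 1] = eye[0, 3] = 20   # eyelashes
eye[3, 2] = 30               # iris texture that is just as dark
mask = eyelash_mask(eye, threshold=60)
print(int(mask.sum()))  # -> 3: the dark iris pixel is flagged along with the eyelashes
```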

NORMALISATION

Once the iris region is successfully segmented from an eye image, the next step is to transform the iris region so that it has fixed dimensions in order to allow comparisons. The dimensional inconsistencies between eye images are mainly due to the stretching of the iris caused by pupil dilation from varying levels of illumination. Other sources of inconsistency include varying imaging distance, rotation of the camera, head tilt, and rotation of the eye within the eye socket. The normalisation process produces iris regions that have the same constant dimensions, so that two photographs of the same iris under different conditions will have characteristic features at the same spatial location.

IMPLEMENTATION

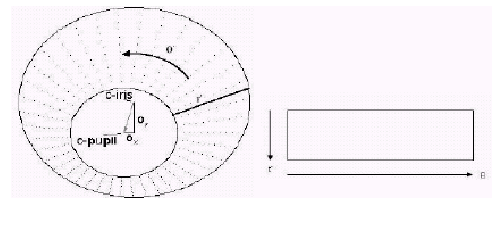

For normalisation of iris regions, a technique based on Daugman’s rubber sheet model is employed. The centre of the pupil is considered as the reference point, and radial vectors pass through the iris region, as shown in Fig. 4. A number of data points are selected along each radial line and this is defined as the radial resolution. The number of radial lines going around the iris region is defined as the angular resolution. Since the pupil can be non-concentric to the iris, a remapping formula is needed to rescale points depending on the angle around the circle.

This is given by

$$ r_2 = \sqrt{\alpha}\,\beta \pm \sqrt{\alpha\beta^{2} - \alpha + r_1^{2}} $$

with

$$ \alpha = Q_x^{2} + Q_y^{2}, \qquad \beta = \cos\left(\pi - \arctan\left(\frac{Q_y}{Q_x}\right) - \theta\right) $$

where the displacement of the centre of the pupil relative to the centre of the iris is given by $Q_x$, $Q_y$; $r_2$ is the distance from the centre of the pupil to the edge of the iris at an angle $\theta$ around the region; and $r_1$ is the radius of the iris. Because the pupil centre lies inside the iris, only the positive root gives a valid distance. The remapping formula gives the radius of the iris region as a function of the angle $\theta$. The same number of points is chosen along each radial line, irrespective of how narrow or wide the radius is at a particular angle. The normalised pattern is created by backtracking to find the Cartesian coordinates of data points from their radial and angular positions in the normalised pattern. From the iris region, normalisation produces a 2D array with horizontal dimensions of angular resolution and vertical dimensions of radial resolution.
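The remapping formula and the rubber-sheet sampling can be sketched as follows. This is a minimal sketch, assuming circles given as `(x, y, r)` in image coordinates with the y axis pointing down, nearest-neighbour sampling, and illustrative resolutions of 20 radial and 240 angular samples; `iris_boundary_distance` and `rubber_sheet` are hypothetical names. The quadrant-aware `np.arctan2` stands in for $\arctan(Q_y/Q_x)$ so that negative displacements are handled.

```python
import numpy as np


def iris_boundary_distance(pupil, iris, theta):
    """Distance r2 from the pupil centre to the iris edge at each angle theta.

    pupil, iris: (x, y, r) circles in image coordinates (y axis points down);
    theta is measured counter-clockwise as seen on screen.
    """
    (xp, yp, _), (xi, yi, r1) = pupil, iris
    qx, qy = xp - xi, yp - yi              # pupil centre relative to iris centre
    alpha = qx ** 2 + qy ** 2
    phi = np.arctan2(qy, qx)               # quadrant-aware arctan(qy / qx)
    beta = np.cos(np.pi - phi - theta)
    # Positive root: the pupil centre lies inside the iris circle.
    return np.sqrt(alpha) * beta + np.sqrt(alpha * beta ** 2 - alpha + r1 ** 2)


def rubber_sheet(eye, pupil, iris, radial_res=20, angular_res=240):
    """Unwrap the iris into a (radial_res, angular_res) block (nearest neighbour)."""
    xp, yp, rp = pupil
    theta = np.linspace(0.0, 2.0 * np.pi, angular_res, endpoint=False)
    r2 = iris_boundary_distance(pupil, iris, theta)
    # radial_res + 2 evenly spaced samples from the pupil edge to the iris edge;
    # the two samples lying on the borders are discarded.
    t = np.linspace(0.0, 1.0, radial_res + 2)[1:-1, None]
    r = rp + t * (r2 - rp)                 # shape (radial_res, angular_res)
    x = xp + r * np.cos(theta)
    y = yp - r * np.sin(theta)             # minus: image rows increase downwards
    cols = np.clip(np.rint(x).astype(int), 0, eye.shape[1] - 1)
    rows = np.clip(np.rint(y).astype(int), 0, eye.shape[0] - 1)
    return eye[rows, cols]
```

With the pupil displaced 2 pixels to the right of the iris centre and an iris radius of 30, the distance is 28 pixels towards the right ($\theta = 0$) and 32 pixels towards the left ($\theta = \pi$), as expected geometrically.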

Another 2D array is created for marking reflections, eyelashes, and eyelids detected in the segmentation stage. In order to prevent non-iris region data from corrupting the normalized representation, data points that occur along the pupil border or the iris border are discarded. As in Daugman’s rubber sheet model, removing rotational inconsistencies is performed at the matching stage.

Fig.4. Outline of the Normalisation process.

FEATURE ENCODING AND MATCHING

In order to provide accurate recognition of individuals, the most discriminating information present in an iris pattern must be extracted. Only the significant features of the iris must be encoded so that comparisons between templates can be made. The template generated in the feature encoding process also needs a corresponding matching metric, which gives a measure of similarity between two iris templates. This metric should give one range of values for intra-class comparisons, that is, when comparing templates generated from the same eye, and another range of values for inter-class comparisons, when comparing templates created from different irises. These two ranges should be distinct and separate, so that a decision can be made with high confidence as to whether two templates are from the same iris or from two different irises.
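How a single threshold trades false acceptances against false rejections can be sketched from lists of intra-class and inter-class distances. The functions below and the sample distances are made up for illustration, not measured results; minimising FAR + FRR is only one possible criterion for choosing the threshold.

```python
def error_rates(intra, inter, threshold):
    """Return (FAR, FRR) for a Hamming distance threshold.

    intra: distances from intra-class comparisons (same eye).
    inter: distances from inter-class comparisons (different irises).
    A pair is declared a match when its distance is lower than the threshold.
    """
    frr = sum(d >= threshold for d in intra) / len(intra)  # genuine pairs rejected
    far = sum(d < threshold for d in inter) / len(inter)   # impostor pairs accepted
    return far, frr


def pick_threshold(intra, inter, candidates):
    """Choose the candidate threshold minimising FAR + FRR (one possible criterion)."""
    return min(candidates, key=lambda t: sum(error_rates(intra, inter, t)))


# Illustrative, made-up distances.
intra = [0.10, 0.15, 0.22, 0.38]
inter = [0.35, 0.45, 0.48, 0.50]
print(error_rates(intra, inter, 0.30))                    # -> (0.0, 0.25)
print(pick_threshold(intra, inter, [0.20, 0.30, 0.40]))  # -> 0.3
```

When the two distributions overlap, as the 0.38 and 0.35 values do here, no threshold removes both kinds of error, which is why the separation between the two ranges matters.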

IMPLEMENTATION

FEATURE ENCODING

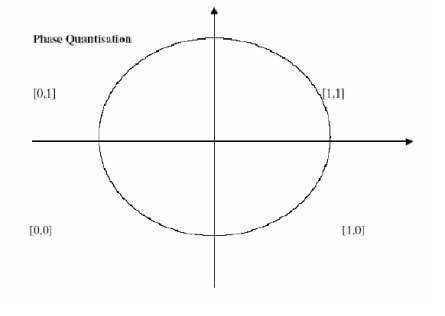

Fig.5. Phase Quantisation

Feature encoding is implemented by convolving the normalised iris pattern with 1D Log-Gabor wavelets. The 2D normalised pattern is broken up into a number of 1D signals, and these 1D signals are then convolved with the 1D Log-Gabor wavelets. The rows of the 2D normalised pattern are taken as the 1D signals, each row corresponding to a circular ring on the iris region. The angular direction (the rows) is used rather than the radial direction (the columns of the normalised pattern), since maximum independence occurs in the angular direction. The intensity values at known noise areas in the normalised pattern are set to the average intensity of the surrounding pixels, to prevent noise from influencing the output of the filtering.

The output of filtering is then phase quantised to four levels using the Daugman method, with each filter producing two bits of data for each phasor. The output of phase quantisation is chosen to be a Gray code, so that when going from one quadrant to the next, only one bit changes. This minimises the number of disagreeing bits if, say, two intra-class patterns are slightly misaligned, and thus provides more accurate recognition. The feature encoding process is illustrated in Fig. 6. The encoding process produces a bitwise template containing a number of bits of information, and a corresponding noise mask, which marks the bits in the template that correspond to corrupt areas within the iris pattern. Since the phase information is meaningless at regions where the amplitude is zero, these regions are also marked in the noise mask. The total number of bits in the template is the product of the angular resolution, the radial resolution, the number of filters used, and 2 (the number of bits per phasor). The number of filters, their centre frequencies and the parameters of the modulating Gaussian function are chosen in order to achieve the best recognition rate.
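The encoding steps can be sketched with NumPy by applying a single Log-Gabor filter to each row in the frequency domain. The filter parameters (`wavelength=18.0`, `sigma_on_f=0.5`), the amplitude cut-off `eps`, and the replacement of noisy samples by the mean of all clean samples (rather than of the surrounding pixels) are assumptions; `encode_iris` is a hypothetical name.

```python
import numpy as np


def encode_iris(pattern, noise, wavelength=18.0, sigma_on_f=0.5, eps=1e-6):
    """Encode a normalised iris pattern with one 1D Log-Gabor filter per row.

    pattern: (radial_res, angular_res) normalised intensities.
    noise:   bool array of the same shape, True at eyelid/eyelash/reflection pixels.
    wavelength, sigma_on_f, eps: illustrative parameters (assumptions).
    Returns (template, mask), each of shape (radial_res, 2 * angular_res).
    """
    signal = pattern.astype(float)
    if noise.any() and (~noise).any():
        # Simplification: noisy samples take the mean of all clean samples.
        signal[noise] = signal[~noise].mean()
    n = pattern.shape[1]
    freq = np.fft.fftfreq(n)                    # cycles per sample
    f0 = 1.0 / wavelength                       # filter centre frequency
    gain = np.zeros(n)
    pos = freq > 0                              # one-sided filter: complex output
    gain[pos] = np.exp(-np.log(freq[pos] / f0) ** 2 / (2 * np.log(sigma_on_f) ** 2))
    # Filter each row, i.e. each ring of the iris, in the angular direction.
    response = np.fft.ifft(np.fft.fft(signal, axis=1) * gain, axis=1)
    # Two bits per phasor from the signs of the real and imaginary parts.
    # Quadrants I, II, III, IV map to 11, 01, 00, 10: a Gray code.
    template = np.empty((pattern.shape[0], 2 * n), dtype=bool)
    template[:, 0::2] = response.real > 0
    template[:, 1::2] = response.imag > 0
    # Mask both bits of a pixel if it was noisy or the filter amplitude is ~0.
    bad = noise | (np.abs(response) < eps)
    mask = np.repeat(bad, 2, axis=1)
    return template, mask
```

With one filter and a 20 x 240 normalised pattern, the template holds 20 x 240 x 2 = 9600 bits, in line with the product given above.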

Sample Iris Template:

Fig.6: An illustration of the feature encoding process.

MATCHING

For matching, the Hamming distance is chosen as the recognition metric, since bit-wise comparisons are necessary. The Hamming distance algorithm employed also incorporates noise masking, so that only significant bits are used in calculating the Hamming distance between two iris templates. When computing the Hamming distance, only those bits that correspond to ‘0’ bits in the noise masks of both iris patterns are used. The Hamming distance is thus calculated using only the bits generated from the true iris region, and this modified Hamming distance formula is given as:

$$ HD = \frac{1}{N - \sum_{k=1}^{N} \left( Xn_k \lor Yn_k \right)} \sum_{j=1}^{N} \left( X_j \oplus Y_j \right) \land \overline{Xn_j} \land \overline{Yn_j} $$

where $X_j$ and $Y_j$ are the two bit-wise templates to be compared, $Xn_j$ and $Yn_j$ are the corresponding noise masks for $X_j$ and $Y_j$, and $N$ is the number of bits represented by each template. Although in theory two iris templates generated from the same iris will have a Hamming distance of 0, in practice this will not occur. Normalisation is not perfect, and some noise will go undetected, so some variation will be present when comparing two intra-class iris templates.

In order to account for rotational inconsistencies, when the Hamming distance of two templates is calculated, one template is shifted left and right bitwise, and a number of Hamming distance values are calculated from successive shifts. This bit-wise shifting in the horizontal direction corresponds to rotation of the original iris region by an angle determined by the angular resolution used. For example, if an angular resolution of 180 samples around the iris is used, each shift corresponds to a rotation of 2 degrees in the iris region. This method is suggested by Daugman, and corrects misalignments in the normalised iris pattern caused by rotational differences during imaging. From the calculated Hamming distance values, only the lowest is taken, since this corresponds to the best match between two templates. The number of bits moved during each shift is two times the number of filters used, since each filter generates two bits of information from one pixel of the normalised region; if one filter is used to encode the templates, only two bits are moved during a shift. The actual number of shifts required to normalise rotational inconsistencies is determined by the maximum angular difference between two images of the same eye, where one shift is defined as one shift to the left followed by one shift to the right.
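The masked Hamming distance and the shift search can be sketched as below. This assumes bool arrays whose columns follow the angular direction, with the 2 bits from each filter for one pixel stored next to each other; `masked_hamming`, `shifted_hamming` and the default `max_shifts=8` are hypothetical.

```python
import numpy as np


def masked_hamming(t1, m1, t2, m2):
    """Fractional Hamming distance over bits that are clean (mask 0) in both templates."""
    valid = ~(m1 | m2)
    n_valid = np.count_nonzero(valid)
    if n_valid == 0:
        return float("nan")  # nothing left to compare
    return np.count_nonzero((t1 ^ t2) & valid) / n_valid


def shifted_hamming(t1, m1, t2, m2, max_shifts=8, n_filters=1):
    """Lowest masked Hamming distance over circular shifts of t1 and its mask.

    Each shift moves 2 * n_filters bits along the angular axis (columns),
    i.e. one pixel of the normalised pattern.
    """
    step = 2 * n_filters
    best = float("inf")
    for s in range(-max_shifts, max_shifts + 1):
        hd = masked_hamming(np.roll(t1, s * step, axis=1),
                            np.roll(m1, s * step, axis=1), t2, m2)
        if hd < best:  # NaN never compares smaller, so it is skipped
            best = hd
    return best
```

A pair would then be declared a match when the returned distance is lower than the chosen Hamming distance threshold.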

CONCLUSION

In this paper, an iris recognition system using grayscale eye images has been presented in order to evaluate the performance of iris recognition technology. First, an automatic segmentation algorithm was presented, which localises the iris region in an eye image and isolates eyelid, eyelash and reflection areas. Automatic segmentation was achieved through the use of the circular Hough transform for localising the iris and pupil regions, and the linear Hough transform for localising occluding eyelids.

Thresholding was also employed for isolating eyelashes and reflections. Next, the segmented iris region was normalised to eliminate dimensional inconsistencies between iris regions. This was achieved by implementing a version of Daugman’s rubber sheet model, where the iris is modelled as a flexible rubber sheet that is unwrapped into a rectangular block with constant polar dimensions. Finally, features of the iris were encoded by convolving the normalised iris region with 1D Log-Gabor filters and phase quantising the output in order to produce a bitwise biometric template. The Hamming distance was chosen as the matching metric, giving a measure of how many bits disagree between two templates. A failure of the test of statistical independence between two templates results in a match; that is, the two templates are deemed to have been generated from the same iris if the Hamming distance produced is lower than a set Hamming distance threshold.